Dilyara Bareeva

PhD Candidate in Interpretable AI. Fraunhofer Heinrich Hertz Institute.

Hi, I am Dilya 👋 I am a PhD candidate in Interpretable AI at the Fraunhofer Heinrich Hertz Institute, supervised by Sebastian Lapuschkin and Wojciech Samek. My research focuses on developing methods for understanding and improving the decision-making of deep learning models. Additionally, I am interested in evaluating the faithfulness and adversarial robustness of established interpretability methods.

I began my research journey in the field of Explainability at the Understandable Machine Intelligence Lab. Prior to this, I worked as a data scientist at EY, where I developed AI-based tools for automating financial processes. I hold a Bachelor’s degree in Computer Science from the Technical University of Berlin, a Bachelor’s degree in Economics from MGIMO, and a Master’s degree in Economics and Management Science from Humboldt University of Berlin.

news

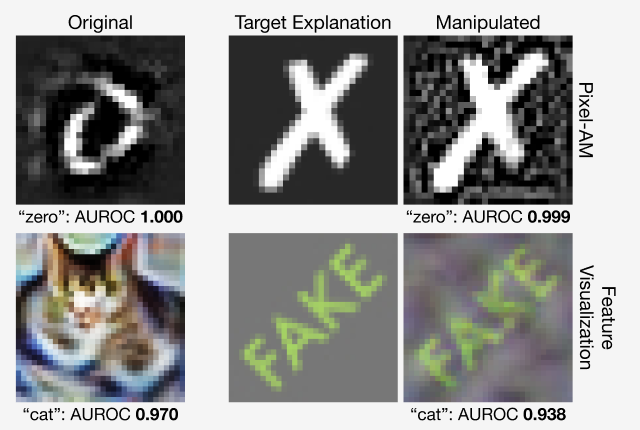

| Oct 03, 2025 | ✨ Our work Manipulating Feature Visualizations with Gradient Slingshots will appear at NeurIPS 2025! |
|---|---|
| Oct 03, 2025 | ✨ Beyond Scalars: Concept-Based Alignment Analysis in Vision Transformers has been accepted to NeurIPS 2025! |
| Jun 13, 2025 | A major update of the Manipulating Feature Visualizations with Gradient Slingshots preprint is now available on arXiv. |
| Apr 28, 2025 | Beyond Scalars: Concept-Based Alignment Analysis in Vision Transformers was presented at the Workshop on Representational Alignment at ICLR 2025 in Singapore! |
| Dec 14, 2024 | 🐼 quanda was presented at the 2nd Workshop on Attributing Model Behavior at Scale at NeurIPS 2024! |

selected publications

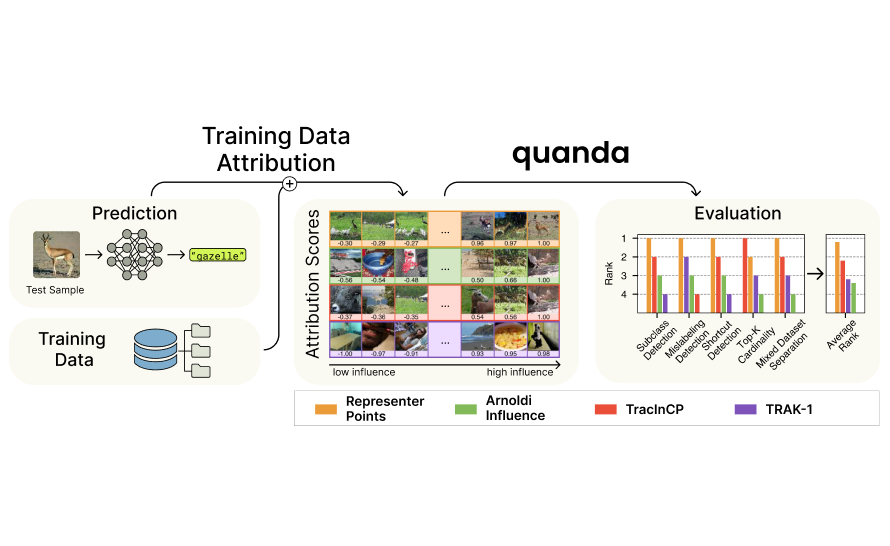

- NeurIPS 2024 ATTRIB WS

Quanda: An Interpretability Toolkit for Training Data Attribution Evaluation and Beyond. In Second NeurIPS Workshop on Attributing Model Behavior at Scale, Dec 2024

- ICML 2024 Mechanistic Interpretability WS

Manipulating Feature Visualizations with Gradient Slingshots. In ICML 2024 Workshop on Mechanistic Interpretability, Jul 2024

- CVPR 2024 SAIAD WS

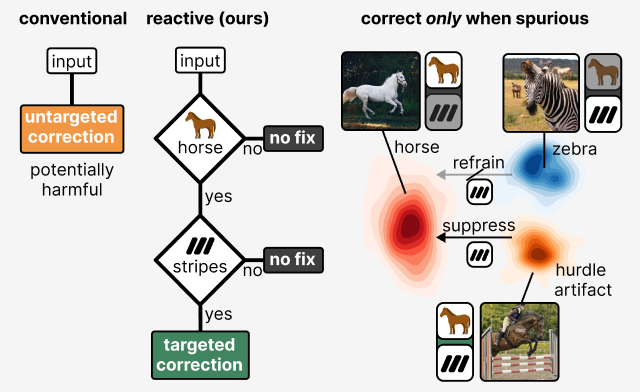

Reactive Model Correction: Mitigating Harm to Task-Relevant Features via Conditional Bias Suppression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Jun 2024